Warning: Outdated Content

This MP is from a previous version of CS 125.

MP6: JSON and Android

Welcome to Android! For MP6 you’ll get introduced to Android by modifying a simple app. But the core topics of the MP are actually using application programmer interfaces (APIs) and serialization. You’ll get practice using the Microsoft Cognitive Services API and parsing data formatted in JavaScript Object Notation (JSON), a simple and widely supported data exchange format.

MP6 is due Friday 4/13/2018 @ 5PM. To receive full credit, you must submit by this deadline. In addition, 10% of your grade on MP6 is for submitting code that earns at least 40 points by Wednesday 4/11/2018 @ 5PM. As usual, late submissions will be subject to the MP late submission policy.

1. Learning Objectives

MP6 introduces you to integrating external APIs into your projects and to Android development. We’ll show you how to use the powerful Microsoft Cognitive Services API to analyze image data, and you’ll learn how to process the results. We’ll also introduce you to the structure of a simple Android app, and you’ll get practice modifying the UI to reflect the information provided by the Cognitive Services API. We’ll also continue to reinforce the learning objectives from previous MPs (0, 1, 2, 3, 4, and 5).

2. Assignment Structure

Unlike previous MPs, MP6 is split into two pieces:

- /lib/: a small library that extracts certain pieces of information from the image data returned by the Microsoft Cognitive Services API. This library is the only part of the MP tested by the test suite.
- /app/: an Android app for you to use for your own interactive testing. The Android app is almost complete, but needs a few modifications from you. And, to work correctly, it needs you to finish the library in lib.

2.1. MP6 Image Recognition Library (lib)

The MP6 app handles uploading photos to the Microsoft Cognitive Services API. However, once the results are returned it relies on your library to extract a few pieces of "important" information:

- The image width, height, and format: using getWidth, getHeight, and getFormat.
- The autogenerated image caption: using getCaption.
- Whether the image contains a dog or a cat[1]: using isADog and isACat.
- Whether you’ve been rickrolled (that is, whether the photo contains Rick Astley): using isRick.

As always, you may find our official MP6 online documentation useful as you work out what you need to do. If you believe that the documentation is unclear, please post on the forum and we’ll offer clarification as needed. To complete this part of the assignment you’ll want to review the section on JSON below.

2.2. MP6 App

The MP6 app handles obtaining images: either ones saved previously on the device, captured by the camera, or downloaded from the internet. It also uploads those images to the Microsoft Cognitive Services API and updates the UI appropriately, or at least parts of it.

To complete MP6, most of the code that you write will go into the image recognition library. However, there are a few small changes that we encourage you to make so that the user interface functions properly.

We’ve done a few things to get you started. Examine finishProcessImage to see how we use the results from getWidth, getHeight, and getFormat to update a part of the UI.

To complete the UI you’ll need to do the following things:

- Update the caption using the result from getCaption. You should be able to use our example of updating the description as a starting point.
- Show or hide the cat and dog icons as appropriate. You may want to look at updateCurrentBitmap for examples of how these icons are hidden when the current image is cleared.
- Do something when you find Rick. You do want to celebrate, right? Maybe try something like this.

2.3. Obtaining MP6

Note that we are using Android Studio for MP6. Use this GitHub Classroom invitation link to fork your copy of MP6. Once your repository has been created, import it into Android Studio following our assignment Git workflow guide.

2.4. Getting Help

The course staff is ready and willing to help you every step of the way[2]! Please come to office hours, or post on the forum when you need help. You should also feel free to help each other, as long as you do not violate the academic integrity requirements.

2.5. Your Goal

At this point you should be familiar with the requirements from previous MPs. See the grading description below. However, note that for MP6 we have not provided a complete test suite. You will have to figure out how to test your Rick Astley detection yourself.

3. JSON

Object-oriented languages make it easy to model data internally by designing classes. But at times we need to exchange data between two different programs or systems, possibly implemented in different languages. That requires representing the data in a format that both systems can understand. JSON (JavaScript Object Notation) is one popular data exchange format in wide use on the internet, and is frequently used to communicate with web APIs.

JSON is both simple and incredibly powerful. It is built from only two structures, objects and arrays, yet can represent a wide variety of data. Using the Microsoft Cognitive Services API requires understanding JSON, and completing MP6 requires that you implement several simple JSON parsing tasks.

3.1. What is JSON?

Imagine we have an instance of the following Java class:

    public class Person {
        public String name;
        public int age;

        Person(String setName, int setAge) {
            name = setName;
            age = setAge;
        }
    }

    Person geoffrey = new Person("Geoffrey", 38);

Now imagine we want to send this information to another computer program: for example, from an Android app written in Java to a web application programmer interface (API) that could be written in Java, Python, or any other language. How do we represent this information in a way that is correct and complete, yet also portable?

JSON (JavaScript Object Notation) has become a popular answer to that question. While it is named after JavaScript, the language that introduced JSON, JSON is now supported by pretty much every common programming language. This allows an app written in Java to communicate with a web API written in Python, or a web application written in JavaScript to communicate with a web backend written in Rust.

Enough talk. Here’s how the object above could be represented in JSON:

    {
        "name": "Geoffrey",
        "age": 38
    }

JSON has only two ways to structure data: objects and arrays. Above you see an example object. Like a Java object, it has named variables (name, age), each of which takes on a particular value ("Geoffrey", 38).
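To make the mapping from fields to name/value pairs concrete, here is a minimal hand-written sketch that turns the Person instance above into the JSON text shown. The class name HandSerializer is hypothetical, and the sketch escapes only double quotes and backslashes; in practice you would let a JSON library such as GSON do this work.

```java
// A field-by-field mirror of the Person class above.
class Person {
    public String name;
    public int age;

    Person(String setName, int setAge) {
        name = setName;
        age = setAge;
    }
}

// Hypothetical helper that serializes a Person by hand, for illustration only.
class HandSerializer {
    /**
     * Wraps a Java string in JSON double quotes. Only backslashes and double quotes
     * are escaped; a complete serializer would also escape control characters such as newlines.
     */
    static String quote(String s) {
        // Escape backslashes first so the backslashes added for quotes are not doubled.
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    /** Each instance variable becomes one "name": value pair inside a JSON object. */
    static String toJson(Person p) {
        return "{\"name\": " + quote(p.name) + ", \"age\": " + p.age + "}";
    }
}
```

Calling HandSerializer.toJson(new Person("Geoffrey", 38)) produces {"name": "Geoffrey", "age": 38}. Strings are quoted and numbers are written bare, which is exactly how the JSON above distinguishes "Geoffrey" from 38.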

Here’s another example. The following instance of this Java class:

    public class Course {
        public String name;
        public int enrollment;
        public double averageGrade;

        Course(String setName, int setEnrollment, double setAverageGrade) {
            name = setName;
            enrollment = setEnrollment;
            averageGrade = setAverageGrade;
        }
    }

    Course cs125 = new Course("CS 125", 500, 3.9);

would be represented as this JSON string:

    {
        "name": "CS 125",
        "enrollment": 500,
        "averageGrade": 3.9
    }

JSON can also represent arrays. This Java array:

    int[] array = new int[] { 1, 2, 10, 8 };

would be represented using this JSON string:

    [1, 2, 10, 8]

We can also represent nested objects and objects with array instance variables:

    public class Person {
        public String name;
        public int age;

        Person(String setName, int setAge) {
            name = setName;
            age = setAge;
        }
    }

    public class Course {
        public String name;
        public int enrollment;
        public double averageGrade;
        public Person instructor;
        public int[] grades;

        Course(String setName, int setEnrollment,
                double setAverageGrade, Person setInstructor,
                int[] setGrades) {
            name = setName;
            enrollment = setEnrollment;
            averageGrade = setAverageGrade;
            instructor = setInstructor;
            grades = setGrades;
        }
    }

    Course cs125 = new Course("CS 125", 500, 3.9,
            new Person("Geoffrey", 38), new int[] { 4, 4, 3 });

would be represented as this JSON string:

    {
        "name": "CS 125",
        "enrollment": 500,
        "averageGrade": 3.9,
        "instructor": {
            "name": "Geoffrey",
            "age": 38
        },
        "grades": [
            4,
            4,
            3
        ]
    }

3.2. Parsing JSON

Because JSON is supported by many different programming languages, many web APIs return data in JSON format. The Microsoft Cognitive Services API is one of them. To utilize this data, you must first parse it or deserialize it. The process of converting a Java object—or object in any language—to JSON is called serialization. The reverse process is called deserialization.

Happily, good libraries exist to parse JSON in every programming language. Java is no exception. We have included the Google GSON JSON parsing library in your project for you to use.

One way to use GSON is to create a class that matches your JSON string. So if you were provided with this JSON from a web API:

    {
        "number": 0,
        "caption": "I'm a zero"
    }

you would design this Java class to represent it:

    public class Result {
        public int number;
        public String caption;
    }

Note how our class mirrors both the names (number, caption) and types (int, String) from the JSON result.
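With a matching class in place, GSON can fill it in for you. Below is a minimal sketch, assuming the GSON library is on the classpath, that deserializes the JSON above with Gson.fromJson(String, Class) and serializes it again with Gson.toJson(Object). The class name ResultDemo is hypothetical.

```java
import com.google.gson.Gson;

// Mirrors the JSON: field names and types must match the JSON keys and values.
class Result {
    public int number;
    public String caption;
}

// Hypothetical wrapper around GSON's reflection-based (de)serialization.
class ResultDemo {
    /** Deserialization: JSON text in, populated Result object out. */
    static Result parse(String json) {
        return new Gson().fromJson(json, Result.class);
    }

    /** Serialization: Result object in, compact JSON text out. */
    static String serialize(Result r) {
        return new Gson().toJson(r);
    }
}
```

Note that when serializing, GSON escapes some characters by default; for example, the apostrophe in "I'm a zero" is written as \u0027. Parsing that output gives back the original string, and building the instance with new GsonBuilder().disableHtmlEscaping().create() turns the escaping off.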

However, when you are working with unfamiliar JSON data, as you are in MP6, we suggest that you not create new classes and instead use GSON’s general-purpose JsonParser and JsonObject classes. Here’s an example of how to do this given the JSON string shown above:

    import com.google.gson.JsonObject;
    import com.google.gson.JsonParser;

    // jsonString holds the JSON text shown above
    JsonParser parser = new JsonParser();
    JsonObject result = parser.parse(jsonString).getAsJsonObject();
    int number = result.get("number").getAsInt();
    String caption = result.get("caption").getAsString();

Note that for MP6 we will not grade any additional class files you add to your lib directory, so we suggest you follow our example above[3].
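Real API responses are nested, so extracting a value usually means walking down through objects and arrays. The sketch below does that for the course JSON from section 3.1 (not the Cognitive Services format), using the same JsonParser call as above. CourseJson and its method names are hypothetical; in MP6 such methods would live in your existing library class. Newer GSON releases deprecate the instance parse method in favor of the static JsonParser.parseString, which older releases do not have.

```java
import com.google.gson.JsonArray;
import com.google.gson.JsonElement;
import com.google.gson.JsonObject;
import com.google.gson.JsonParser;

// Hypothetical helpers showing nested-object and array access with GSON.
class CourseJson {
    /** Returns the instructor's name, or null if the input or the field is missing. */
    static String instructorName(String json) {
        if (json == null) {
            return null;
        }
        JsonObject course = new JsonParser().parse(json).getAsJsonObject();
        // get() returns null for absent keys, so check with has() before descending.
        if (!course.has("instructor")) {
            return null;
        }
        // A nested object comes back as another JsonObject and is navigated the same way.
        JsonObject instructor = course.getAsJsonObject("instructor");
        return instructor.get("name").getAsString();
    }

    /** Sums the "grades" array; a JsonArray can be iterated element by element. */
    static int totalGrades(String json) {
        JsonObject course = new JsonParser().parse(json).getAsJsonObject();
        JsonArray grades = course.getAsJsonArray("grades");
        int total = 0;
        for (JsonElement grade : grades) {
            total += grade.getAsInt();
        }
        return total;
    }
}
```

For the course JSON above, instructorName returns "Geoffrey" and totalGrades returns 11 (4 + 4 + 3). Checking for null input and missing keys first keeps a surprising response from crashing your code with a NullPointerException.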

3.3. Example JSON

Here is some example JSON produced by the Microsoft Cognitive Services API. You may want to consult this as you begin work on your image recognition functions.

4. Android

Android is a Java-based framework for building smartphone apps that run on the Android platform. By learning how to build Android apps, your programs can have enormous impact. As of a year ago, Google estimated that there were 2 billion active Android devices. That’s over 25% of people on Earth—and several times more than iOS.

However, Android is also a huge and complex system. It’s easy to feel lost when you are getting started. Our best advice is to just slow down, take a deep breath, and try to understand a bit of what is going on at a time. We’ll try to walk you through a few of the salient bits for MP6 below.

4.1. Logging

Like any other computer program, an important part of developing on Android is generating debugging output. On Android, our familiar System.out.println doesn’t quite work the same way we’re used to. However, Android has a simple yet powerful logging system.

Unlike System.out.println, logging systems allow you to specify multiple log levels indicating the kind of output that you are generating. This allows you to distinguish between, for example, debugging output that might only be useful during development and a warning message that might indicate a more serious problem or failure. The Android logger also allows you to attach a String tag to each message to help separate them when you are debugging or developing. So the final syntax of the call to generate a debugging message, for example, is Log.d(TAG, message).
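Android’s Log class only runs on a device or emulator, so the sketch below illustrates the same two ideas, levels and a named tag, using the JDK’s java.util.logging instead. This is an analogy, not Android’s API: Level.FINE plays roughly the role of Log.d and Level.WARNING the role of Log.w. LoggingDemo and its messages are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

class LoggingDemo {
    // The logger name acts like Android's per-class TAG string.
    static final String TAG = "MP6:Demo";

    /** Emits one debug-level and one warning-level message; returns what a handler at minLevel kept. */
    static List<String> run(Level minLevel) {
        Logger logger = Logger.getLogger(TAG);
        logger.setUseParentHandlers(false); // keep the demo off the console
        logger.setLevel(Level.ALL);         // let the handler decide what to keep
        List<String> seen = new ArrayList<>();
        Handler collector = new Handler() {
            @Override
            public void publish(LogRecord record) {
                if (isLoggable(record)) { // drops records below this handler's level
                    seen.add(record.getLevel() + ": " + record.getMessage());
                }
            }

            @Override
            public void flush() { }

            @Override
            public void close() { }
        };
        collector.setLevel(minLevel);
        logger.addHandler(collector);
        try {
            logger.fine("image width parsed");         // debugging output
            logger.warning("API returned no caption"); // something worth attention
        } finally {
            logger.removeHandler(collector);
        }
        return seen;
    }
}
```

With minLevel set to Level.WARNING only the warning survives, which is the point of levels: you can hide debugging chatter without deleting it. On Android the equivalent calls would be Log.d(TAG, "image width parsed") and Log.w(TAG, "API returned no caption").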

For more information, watch the screencast above or review Android’s official logging documentation.

Do you need to know this to complete MP6? Probably, since you need to determine what your app is doing or how things are going wrong.

4.2. Activities

The Android Activity class corresponds to a single screen that the user can interact with. Our simple app contains only one activity, but most apps consist of several: maybe an activity corresponding to the app’s main screen, another for a settings dialog, and still others for other parts of the app.

As you might expect, there are two important moments for an activity: when it appears on the screen, and when it leaves the screen. Android provides functions that you can override to handle both of these events: onCreate and onPause. It is typical for an onCreate method to perform tasks required to make the activity ready for a user to use, such as configuring buttons and other UI elements.

For more information watch the screencast above or review Android’s official Activity information.

Do you need to know this to complete MP6? No. But you may be confused by the overall app structure if you don’t review it.

4.3. UI Events

Why does code in your app run? In many cases it’s because a user interacted with an activity: clicked a button, entered text into a dialog box, or adjusted an on-screen control. Android provides a way for each app to register handlers that run when various user interface (UI) events take place.

Our app uses these to:

- start the open file dialog
- start the process of capturing an image from the camera
- open the download file input box
- rotate the image
- upload the image to the Microsoft Cognitive Services API for processing.

In the screencast above we show how elements of the user interface are linked programmatically to each specific action.

Do you need to know this to complete MP6? No. But it will be hard to understand how your app works without reviewing it.

4.4. UI Modifications

The flip side of user-initiated actions are responses by the app. The normal way for a smartphone app to communicate with the user is by modifying the UI. Pay closer attention to the apps that you use and you’ll start noticing a lot of this: text boxes and photos that change or display information, progress bars that indicate either waiting or a long-running action like playing music, etc.

In the screencast above we’ll review how to modify your app’s UI in response to user actions—or, in the case of MP6, in response to the results from the Microsoft Cognitive Services API.

Do you need to know this to complete MP6? Yes! There are some missing pieces in finishProcessImage waiting for you to complete.

4.5. Intents

One of the powerful features of Android is the ability for multiple apps to communicate with each other. If your app wants to take a photo, for example, it can ask the camera app to take the photo, rather than implementing a camera itself. If your app wants to open a file, as another example, it can ask the file browser to open the file, rather than implementing a file chooser itself. This both greatly simplifies app development and provides users with a familiar interface for the same actions.

Our MP6 app uses intents to launch the camera and a file browser. You do not need to understand this feature, but feel free to watch the screencast above to learn more. And you may find it a fun way to respond to the unexpected appearance of Rick Astley.

Do you need to know this to complete MP6? No, unless you want to really get isRick right.

4.6. Asynchronous Tasks

One of the core goals of every application, including smartphone apps, is to maintain a responsive user interface. If your app freezes for long periods of time, or even short ones, users will quickly stop using it.

Android accomplishes this by delegating certain slow operations to so-called background tasks. They then run independently of the user interface. So your app can be simultaneously responding to new user input and, for example, downloading a large file.

This is an advanced topic and not one that we expect you to master on this MP or even on future ones. But if you want to learn a bit more, watch the screencast above. Our MP6 app uses two background tasks: one to download files and save them to local storage, the second to make requests to the Microsoft Cognitive Services API.
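The core idea, starting slow work on another thread so the calling thread stays free, can be sketched in plain Java with CompletableFuture. This is a JDK analogy, not the Android background-task classes used in Tasks.java, which additionally deliver the result back on the UI thread. BackgroundDemo and slowRequest are hypothetical, and the sleep merely stands in for a network request.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class BackgroundDemo {
    /** Hypothetical stand-in for a slow operation such as an API request. */
    static String slowRequest() {
        try {
            Thread.sleep(200);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
        }
        return "finished on " + Thread.currentThread().getName();
    }

    /** Starts slowRequest on a worker thread, then waits for its result. */
    static String runInBackground() {
        ExecutorService worker = Executors.newSingleThreadExecutor();
        try {
            CompletableFuture<String> pending =
                    CompletableFuture.supplyAsync(BackgroundDemo::slowRequest, worker);
            // While the request runs, this thread is free to do other work.
            return pending.join();
        } finally {
            worker.shutdown();
        }
    }
}
```

The returned string names the worker thread, not the caller’s. Calling join() blocks, which is fine in a small demo but is exactly what an Android app must avoid on its UI thread; that is why Android’s background tasks hand their results back through a callback instead.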

Do you need to know this to complete MP6? No, but it’s interesting!

4.7. Microsoft Cognitive Services API

What is an API (application programmer interface)? Put it this way—there are computer systems out there that can do really cool things for you. APIs provide a way to request their help, and easily integrate powerful features into your applications. You just have to learn how to use them.

In MP6 we’re using the really cool Microsoft Cognitive Services API to process images. The screencast above will show you how to do that. It also walks through the steps you need to add your Microsoft Cognitive Services API key to your project so that you can make your own requests.

Of course, like any artificial intelligence system, the Microsoft Cognitive Services API is not perfect. We’ve seen it produce some very amusing results. If you find a good one, post it on the forum for us to giggle at. Maybe we’ll turn this into a competition for extra credit.

Do you need to know this to complete MP6? You don’t need to understand how the API call is made, but you may need to make a few small changes to Tasks.java and understand the JSON returned by the Microsoft Cognitive Services API. And you do need to add your API key so that your API calls work properly.

5. Grading

MP6 is worth 100 points total, broken down as follows:

- 10 points: for submitting code that compiles
- 10 points: for getWidth
- 10 points: for getHeight
- 10 points: for getFormat
- 10 points: for getCaption
- 10 points: for isADog
- 10 points: for isACat
- 10 points: for isRick
- 10 points: for no checkstyle violations
- 10 points: for committing code that earns at least 40 points before Wednesday 4/11/2018 @ 5PM.

5.1. Test Cases

As in previous MPs, we have provided test cases for MP6. Please review the MP0 testing instructions.

However, unlike previous MPs we have not provided complete test cases for MP6. Specifically, we have not provided a test for isRick. This is intentional, and designed to force you to do your own local testing. It is also designed to not give away exactly what features of the JSON returned by the Microsoft Cognitive Services API you will need to look at to complete isRick.

5.2. Autograding

Like previous MPs we have provided you with an autograding script that you can use to estimate your current grade as often as you want. Please review the MP0 autograding instructions. However, as described above, note that the local test suite will not test isRick, while the remote test suite will.

6. Submitting Your Work

Follow the instructions from the submitting portion of the CS 125 workflow instructions.

And remember, you must submit something that earns 40 points before Wednesday 4/11/2018 @ 5PM to earn 10 points on the assignment.

7. Errata

Below are a few screencasts designed to help you address common problems with MP6.

7.1. Import Not Found Errors

If you see many Import Not Found Errors in MainActivity.java and Tasks.java when initially importing the project, please follow the steps shown in the screencast above.

7.2. Restoring Our Run Configurations

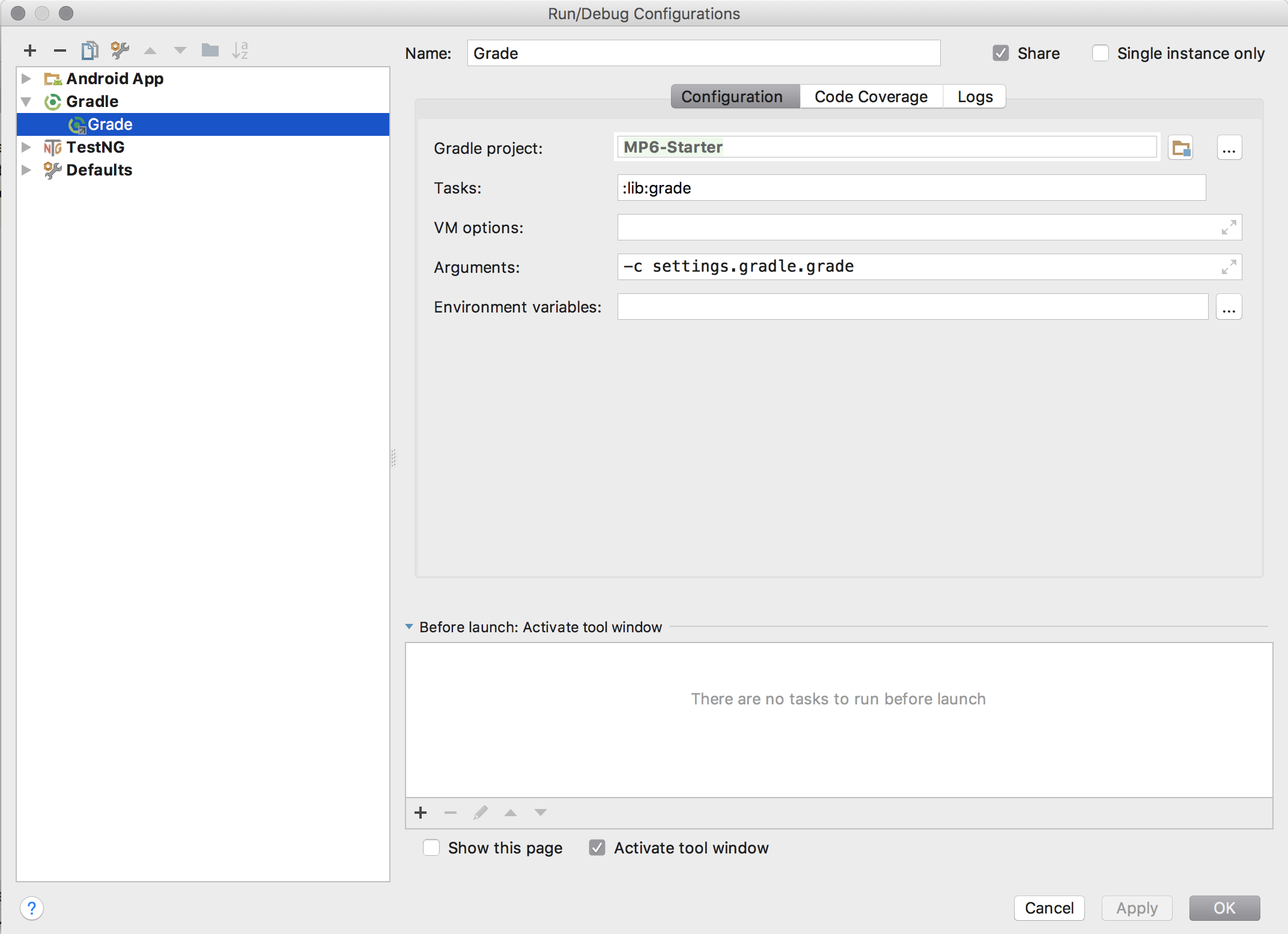

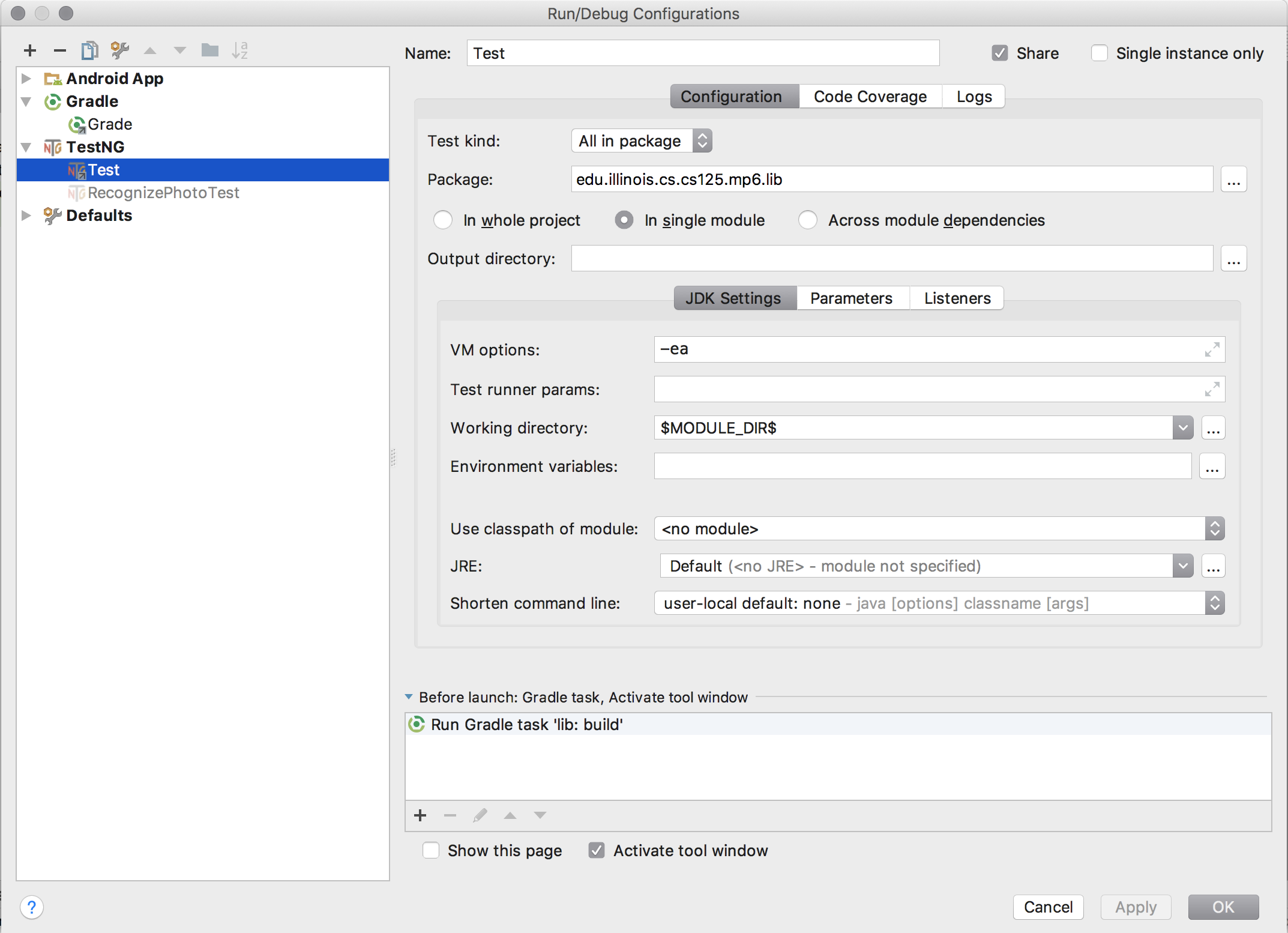

For some reason Android Studio deletes the run configurations that we set up for you when you import the project. We’ve filed a bug against Android Studio to complain about this behavior.

We’ve updated the MP to copy these over when you start, but if they are missing please follow the screencast above for instructions about how to recreate the missing run configurations.

7.3. Testing Problems

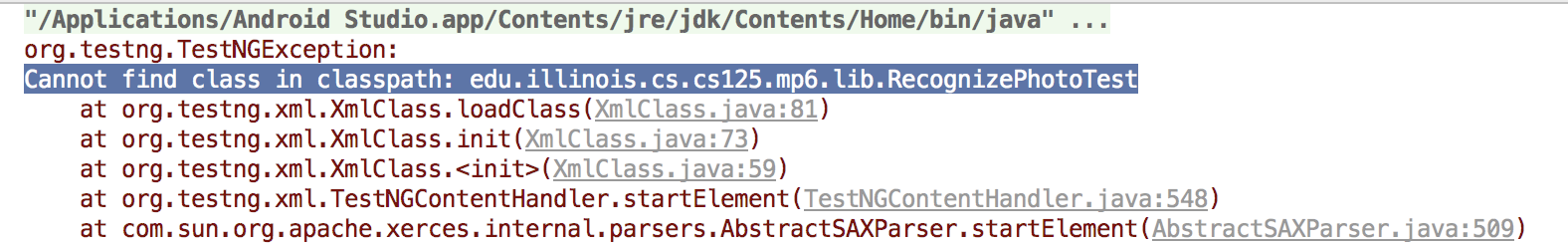

When you try to run the test suite, you may encounter an error like this:

First and foremost, you need to make sure that you’ve added all of the necessary methods to RecognizePhoto.java. Open RecognizePhotoTest.java in Android Studio and make sure that no errors are shown.

To run the test suite the solution is to restore and use the test run configuration as described above. Executing the test suite directly does not seem to work properly on Android Studio.

7.4. Testing Output

Note that, unfortunately, the output from the test suite in Android Studio can be confusing because Actual and Expected are swapped. So, for example, if you fail a test and it says:

    Expected: null
    Actual: "a brown dog"

what it means is that you returned null but it was expecting a brown dog. Apologies for this; we’ll fix it for next year. (Note that this doesn’t affect the correctness of the test suite, just the Android Studio output.)

8. Academic Integrity

Please review the MP0 academic integrity guidelines.

If you cheat, we will make you watch this over and over again: